orcharhino Architecture

1. Introduction to orcharhino

This guide contains information about features provided by the Katello plug-in. If you do not plan to install the Katello plug-in, ignore these references.

orcharhino is a system management solution that enables you to deploy, configure, and maintain your systems across physical, virtual, and cloud environments. orcharhino provides provisioning, remote management and monitoring of multiple Enterprise Linux deployments with a single, centralized tool. orcharhino Server synchronizes the content from Red Hat Customer Portal and other sources, and provides functionality including fine-grained life cycle management, user and group role-based access control, integrated subscription management, as well as advanced GUI, CLI, or API access.

orcharhino Proxy mirrors content from orcharhino Server to facilitate content federation across various geographical locations. Host systems can pull content and configuration from orcharhino Proxy in their location and not from the central orcharhino Server. orcharhino Proxy also provides localized services such as Puppet server, DHCP, DNS, or TFTP. orcharhino Proxies assist you in scaling your orcharhino environment as the number of your managed systems increases.

orcharhino Proxies decrease the load on the central server, increase redundancy, and reduce bandwidth usage. For more information, see orcharhino Proxy Overview.

1.1. System Architecture

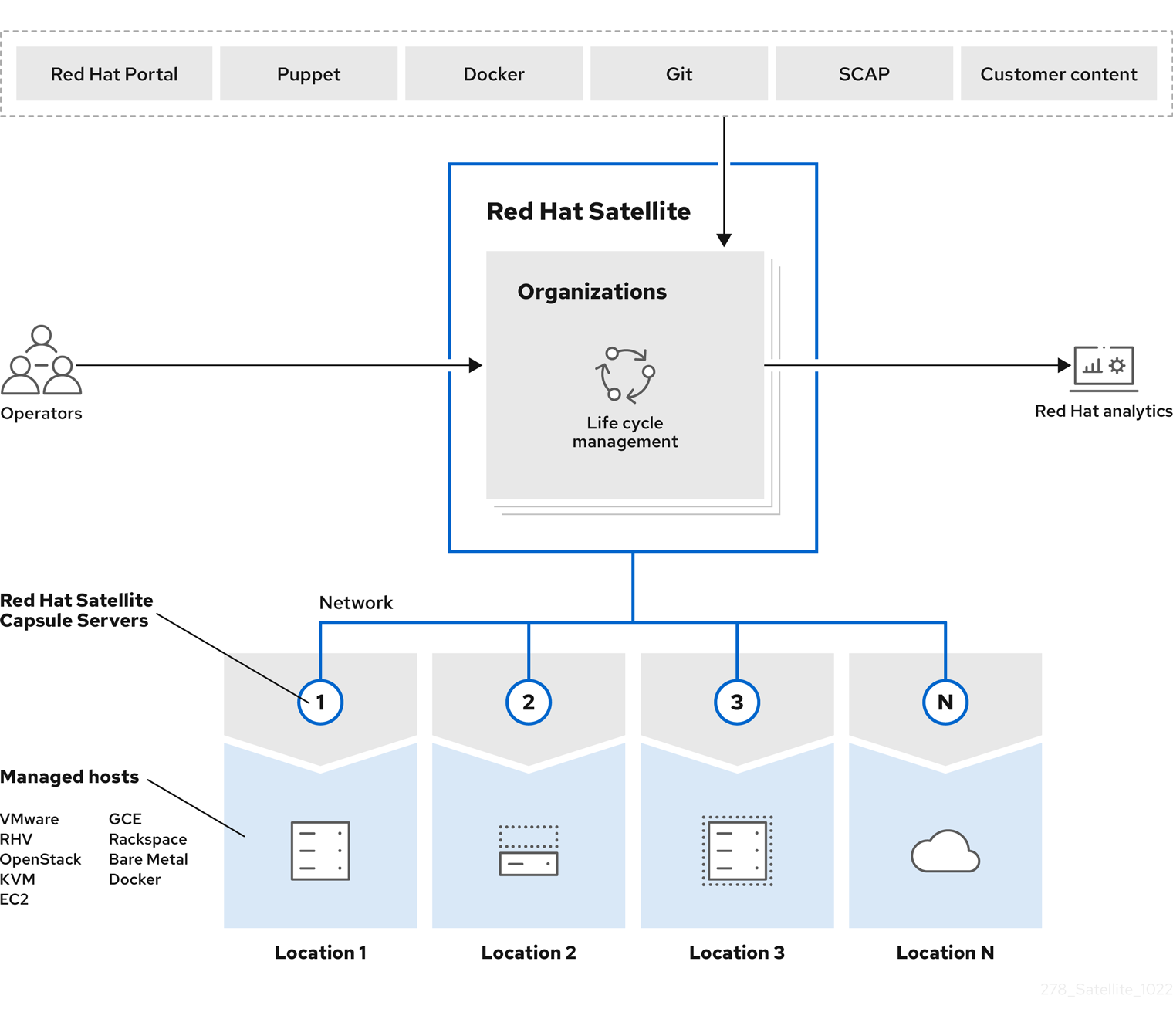

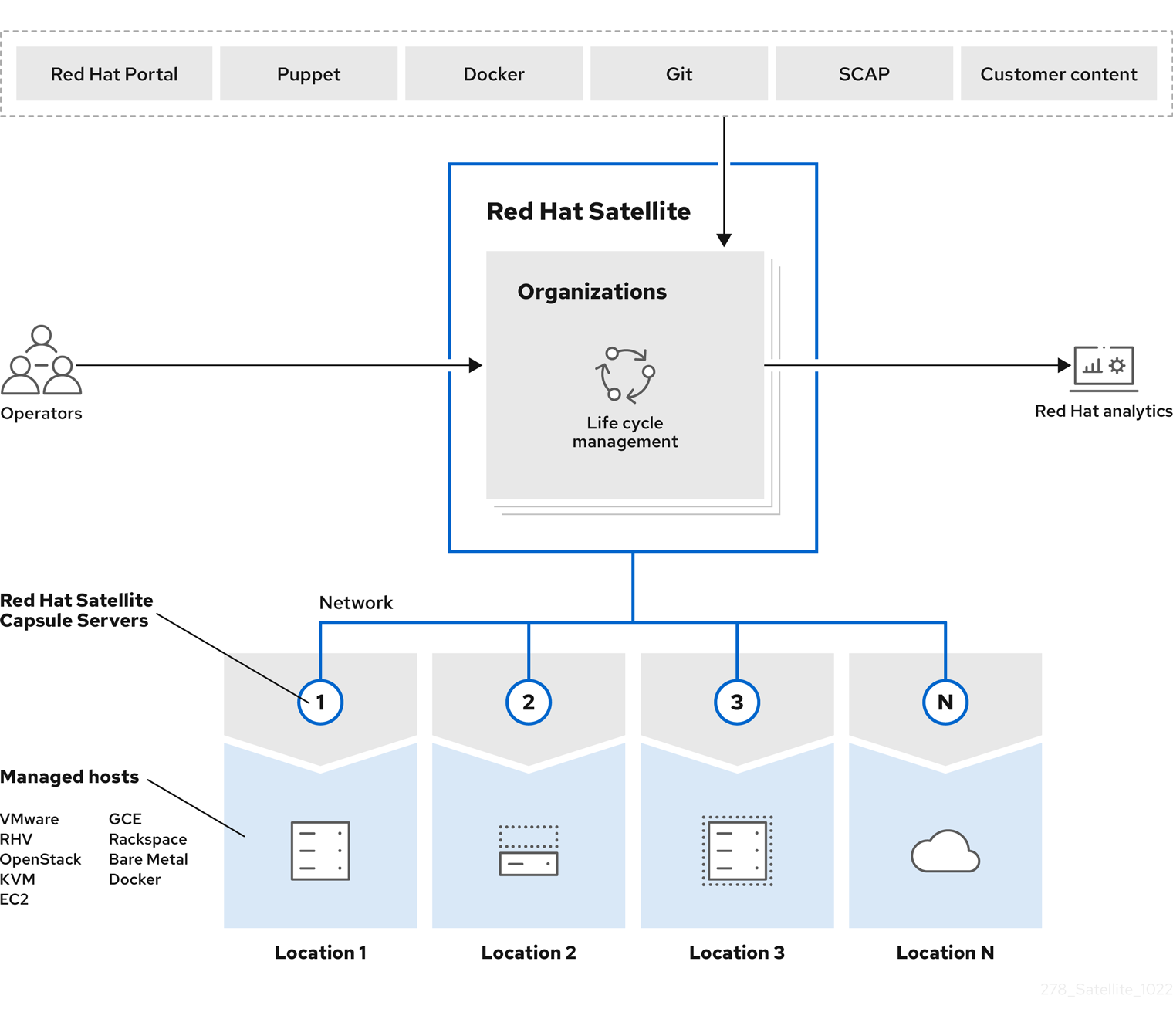

The following diagram represents the high-level architecture of orcharhino.

The graphics in this section are Red Hat illustrations. Non-Red Hat illustrations are welcome. If you want to contribute alternative images, raise a pull request in the Foreman Documentation GitHub page. Note that in Red Hat terminology, "Satellite" refers to Foreman and "Capsule" refers to Smart Proxy.

There are four stages through which content flows in this architecture:

- External Content Sources

The orcharhino Server can consume diverse types of content from various sources. The Red Hat Customer Portal is the primary source of software packages, errata, and container images. In addition, you can use other supported content sources (Git repositories, Docker Hub, SCAP repositories) as well as your organization’s internal data store.

- orcharhino Server

The orcharhino Server enables you to plan and manage the content life cycle and the configuration of orcharhino Proxies and hosts through GUI, CLI, or API.

orcharhino Server organizes the life cycle management by using organizations as principal division units. Organizations isolate content for groups of hosts with specific requirements and administration tasks. For example, the OS build team can use a different organization than the web development team.

orcharhino Server also contains a fine-grained authentication system to provide orcharhino operators with permissions to access precisely the parts of the infrastructure that lie in their area of responsibility.

- orcharhino Proxies

orcharhino Proxies mirror content from orcharhino Server to establish content sources in various geographical locations. This enables host systems to pull content and configuration from orcharhino Proxies in their location and not from the central orcharhino Server. The recommended minimum number of orcharhino Proxies is therefore given by the number of geographic regions where the organization that uses orcharhino operates.

Using Content Views, you can specify the exact subset of content that orcharhino Proxy makes available to hosts. See Content Life Cycle in orcharhino for a closer look at life cycle management with the use of Content Views.

The communication between managed hosts and orcharhino Server is routed through orcharhino Proxy, which can also manage multiple services on behalf of hosts. Many of these services use dedicated network ports, but orcharhino Proxy ensures that a single source IP address is used for all communications from the host to orcharhino Server, which simplifies firewall administration. For more information on orcharhino Proxies, see orcharhino Proxy Overview.

- Managed Hosts

Hosts are the recipients of content from orcharhino Proxies. Hosts can be either physical or virtual. orcharhino Server can have directly managed hosts. The base system running an orcharhino Proxy is also a managed host of orcharhino Server.

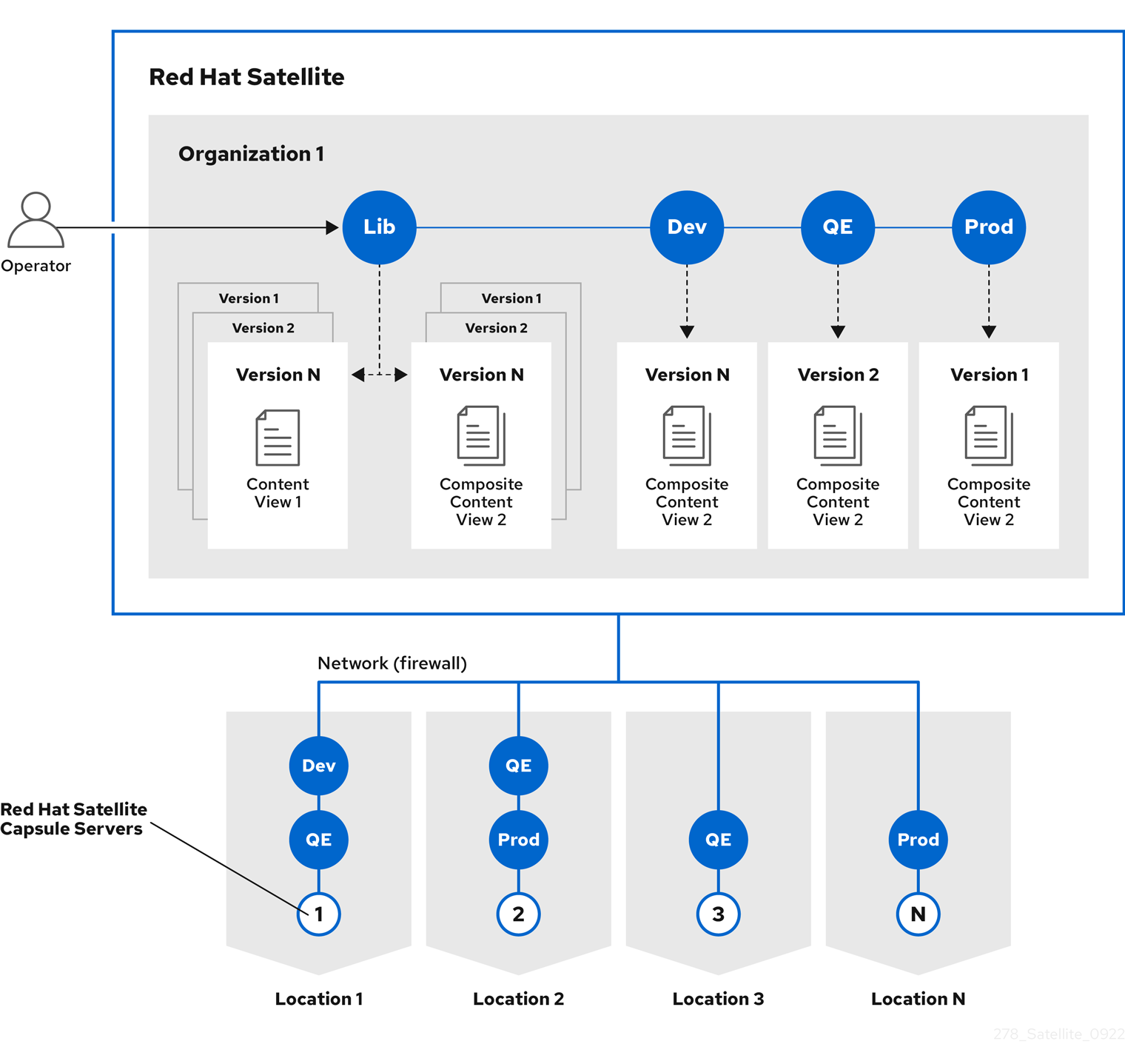

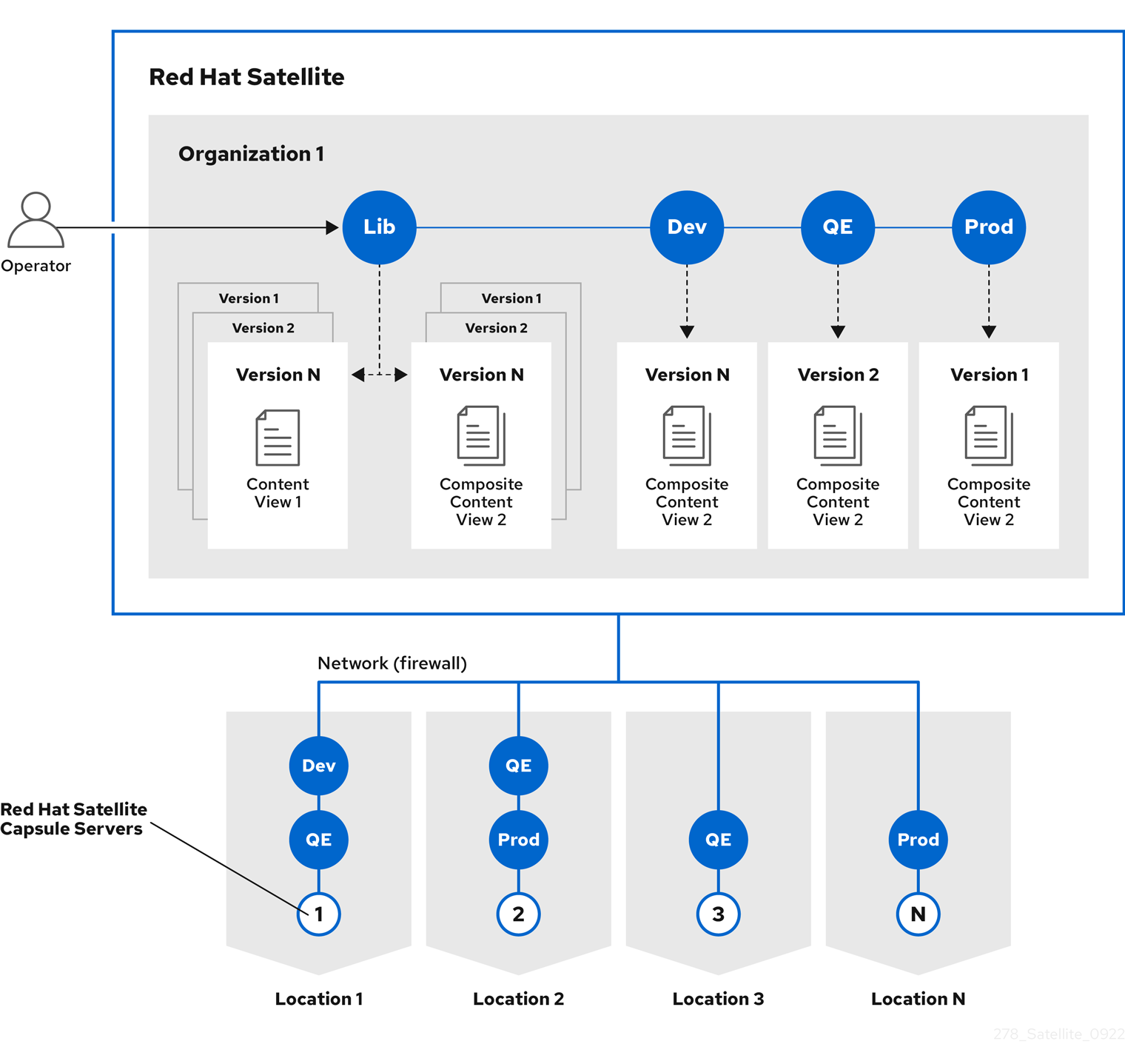

The following diagram provides a closer look at the distribution of content from orcharhino Server to orcharhino Proxies.

By default, each organization has a Library of content from external sources. Content Views are subsets of content from the Library created by intelligent filtering. You can publish and promote Content Views into life cycle environments (typically Dev, QA, and Production). When creating an orcharhino Proxy, you can choose which life cycle environments will be copied to that orcharhino Proxy and made available to managed hosts.

Content Views can be combined to create Composite Content Views. It can be beneficial to have one Content View for a repository of packages required by an operating system and a separate one for a repository of packages required by an application. One advantage is that updates to packages in one repository only require republishing the relevant Content View. You can then use Composite Content Views to combine published Content Views for ease of management.

Which Content Views should be promoted to which orcharhino Proxy depends on the orcharhino Proxy’s intended functionality. Any orcharhino Proxy can run DNS, DHCP, and TFTP as infrastructure services that can be supplemented, for example, with content or configuration services.

You can update orcharhino Proxy by creating a new version of a Content View using synchronized content from the Library. The new Content View version is then promoted through life cycle environments. You can also create in-place updates of Content Views. This means creating a minor version of the Content View in its current life cycle environment without promoting it from the Library. For example, if you need to apply a security erratum to a Content View used in Production, you can update the Content View directly without promoting it through the other life cycle environments. For more information on content management, see Managing Content.
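The publish, promote, and in-place update steps described above can be sketched as a small Python model. This is an illustration only, not the Katello data model: the class, the `rhel8-base` name, the example environment path, and the `major.minor` version strings are assumptions made for this sketch.

```python
from dataclasses import dataclass, field


@dataclass
class ContentView:
    """Toy model of a Content View; not the Katello data model."""
    name: str
    # Example environment path; real paths are defined per organization.
    path: tuple = ("Library", "Dev", "QA", "Production")
    major: int = 0
    # Environment name -> version string currently available there.
    environments: dict = field(default_factory=dict)

    def publish(self) -> str:
        """Publish a new major version from the Library content."""
        self.major += 1
        version = f"{self.major}.0"
        self.environments[self.path[0]] = version
        return version

    def promote(self, version: str, target: str) -> None:
        """Promote a version from the previous environment on the path."""
        index = self.path.index(target)
        if index == 0:
            raise ValueError("publish() places versions in the first environment")
        previous = self.path[index - 1]
        if self.environments.get(previous) != version:
            raise ValueError(f"{version} is not in {previous} yet")
        self.environments[target] = version

    def incremental_update(self, environment: str) -> str:
        """Create a minor version in place, without promoting from the Library."""
        major, minor = self.environments[environment].split(".")
        version = f"{major}.{int(minor) + 1}"
        self.environments[environment] = version
        return version


cv = ContentView("rhel8-base")
v1 = cv.publish()
for env in ("Dev", "QA", "Production"):
    cv.promote(v1, env)
v2 = cv.publish()                              # the Library moves on to a new version
patched = cv.incremental_update("Production")  # erratum applied in place
print(cv.environments)
```

In this model the Production environment receives a minor version while Dev and QA keep the version that was promoted earlier, which mirrors the security erratum example.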

1.2. System Components

orcharhino consists of the following open source projects:

- Foreman

Foreman is an open source application used for provisioning and life cycle management of physical and virtual systems. Foreman automatically configures these systems using various methods, including Kickstart and Puppet modules. Foreman also provides historical data for reporting, auditing, and troubleshooting.

- Katello

Katello is an optional Foreman plug-in for subscription and repository management. It provides a means to subscribe to repositories and download content. You can create and manage different versions of this content and apply them to specific systems within user-defined stages of the application life cycle.

- Candlepin

Candlepin is a service within Katello that handles subscription management.

- Pulp

Pulp is a service within Katello that handles repository and content management. Pulp ensures efficient storage space by not duplicating RPM packages even when requested by Content Views in different organizations.

- Hammer

Hammer is a CLI tool that provides command line and shell equivalents of most orcharhino management UI functions.

- REST API

orcharhino includes a RESTful API service that allows system administrators and developers to write custom scripts and third-party applications that interface with orcharhino.
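As a rough illustration of a custom script that talks to the API, the following Python sketch prepares a request for the host list. It assumes the `/api/hosts` endpoint of the underlying Foreman API and HTTP basic authentication; the server name and credentials are placeholders. Check the API documentation of your installation before relying on any of these details.

```python
import base64
import urllib.request


def build_hosts_request(base_url: str, user: str, password: str) -> urllib.request.Request:
    """Prepare (but do not send) a GET request for the host list."""
    token = base64.b64encode(f"{user}:{password}".encode()).decode()
    return urllib.request.Request(
        f"{base_url.rstrip('/')}/api/hosts",  # assumed endpoint
        headers={
            "Authorization": f"Basic {token}",
            "Accept": "application/json",
        },
        method="GET",
    )


# Placeholder server name and credentials.
request = build_hosts_request("https://orcharhino.example.com", "admin", "changeme")
print(request.get_method(), request.full_url)
```

To send the request, pass it to `urllib.request.urlopen()` and parse the response body with `json.load()`.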

The terminology used in orcharhino and its components is extensive. For explanations of frequently used terms, see Glossary of Terms.

1.3. Supported Operating Systems and Architectures

1.3.1. orcharhino Server Operating System

orcharhino has packages for Enterprise Linux 8, Debian 11 and Ubuntu 20.04. Katello plug-in packages, which provide content management capabilities, are only available for Enterprise Linux.

The only architecture orcharhino has packages for is x86_64.

1.3.2. Client Operating Systems

orcharhino can help to manage any operating system that has clients orcharhino can integrate with. For example:

- Operating system installers that can perform unattended installations (such as Anaconda in Fedora and CentOS, or Debian-installer in Debian and Ubuntu)

- Puppet

- Ansible

- OpenSCAP

- OpenSSH

- Other clients where integration is provided by external plug-ins

orcharhino is actively tested with the following client operating systems:

- CentOS 7 and 8

- Debian stable

- Ubuntu LTS

The Katello plug-in provides functionality for content and subscription management. The following utilities are provided for selected CentOS and SLES operating systems:

- Katello agent

- Subscription manager

- Katello host tools

- Tracer utility

For Red Hat family operating systems, SELinux must not be set to disabled mode.

2. orcharhino Proxy Overview

orcharhino Proxies provide content federation and run localized services to discover, provision, control, and configure hosts. You can use orcharhino Proxies to extend the orcharhino deployment to various geographical locations. This section contains an overview of features that can be enabled on orcharhino Proxies as well as their simple classification.

For more information about orcharhino Proxy requirements, installation process, and scalability considerations, see Installing orcharhino Proxy.

2.1. orcharhino Proxy Features

There are two sets of features provided by orcharhino Proxies. You can use orcharhino Proxy to run services required for host management. If you have the Katello plug-in installed, you can also configure orcharhino Proxy to mirror content from orcharhino Server.

Infrastructure and host management services:

- DHCP – orcharhino Proxy can manage a DHCP server, including integration with an existing solution such as ISC DHCP servers, Active Directory, and Libvirt instances.

- DNS – orcharhino Proxy can manage a DNS server, including integration with an existing solution such as ISC BIND and Active Directory.

- TFTP – orcharhino Proxy can integrate with any UNIX-based TFTP server.

- Realm – orcharhino Proxy can manage Kerberos realms or domains so that hosts can join them automatically during provisioning. orcharhino Proxy can integrate with an existing infrastructure, including FreeIPA and Active Directory.

- Puppet server – orcharhino Proxy can act as a configuration management server by running Puppet server.

- Puppet Certificate Authority – orcharhino Proxy can integrate with Puppet’s CA to provide certificates to hosts.

- Baseboard Management Controller (BMC) – orcharhino Proxy can provide power management for hosts using IPMI or Redfish.

- Provisioning template proxy – orcharhino Proxy can serve provisioning templates to hosts.

- OpenSCAP – orcharhino Proxy can perform security compliance scans on hosts.

- Remote Execution (REX) – orcharhino Proxy can run remote job execution on hosts.

Content related features, provided by the Katello plug-in:

- Repository synchronization – the content from orcharhino Server (more precisely from selected life cycle environments) is pulled to orcharhino Proxy for content delivery (enabled by Pulp).

- Content delivery – hosts configured to use orcharhino Proxy download content from that orcharhino Proxy rather than from the central orcharhino Server (enabled by Pulp).

- Host action delivery – orcharhino Proxy executes scheduled actions on hosts.

- Red Hat Subscription Management (RHSM) proxy – hosts are registered to their associated orcharhino Proxies rather than to the central orcharhino Server or the Red Hat Customer Portal (provided by Candlepin).

2.2. orcharhino Proxy Types

Not all orcharhino Proxy features have to be enabled at once. You can configure an orcharhino Proxy for a specific limited purpose. Some common configurations include:

- Infrastructure orcharhino Proxies [DNS + DHCP + TFTP] – provide infrastructure services for hosts. With provisioning template proxy enabled, infrastructure orcharhino Proxy has all necessary services for provisioning new hosts.

- Content orcharhino Proxies [Pulp] – provide content synchronized from orcharhino Server to hosts.

- Configuration orcharhino Proxies [Pulp + Puppet + PuppetCA] – provide content and run configuration services for hosts.

- All-in-one orcharhino Proxies [DNS + DHCP + TFTP + Pulp + Puppet + PuppetCA] – provide a full set of orcharhino Proxy features. All-in-one orcharhino Proxies enable host isolation by providing a single point of connection for managed hosts.
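The classification above amounts to checking which feature sets are fully enabled on a given orcharhino Proxy. A minimal Python sketch follows; the type names and feature labels are taken from the list, but they are labels for this example, not identifiers used by orcharhino itself.

```python
# Feature sets copied from the list above; labels are for this sketch only.
PROXY_TYPES = {
    "infrastructure": {"DNS", "DHCP", "TFTP"},
    "content": {"Pulp"},
    "configuration": {"Pulp", "Puppet", "PuppetCA"},
    "all-in-one": {"DNS", "DHCP", "TFTP", "Pulp", "Puppet", "PuppetCA"},
}


def matching_types(enabled: set) -> list:
    """Return every proxy type whose required features are all enabled."""
    return [name for name, required in PROXY_TYPES.items() if required <= enabled]


infra = matching_types({"DNS", "DHCP", "TFTP", "Templates"})
config = matching_types({"Pulp", "Puppet", "PuppetCA"})
print(infra)
print(config)
```

A configuration proxy also satisfies the content type because its feature set includes Pulp, so the types are overlapping descriptions rather than exclusive categories.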

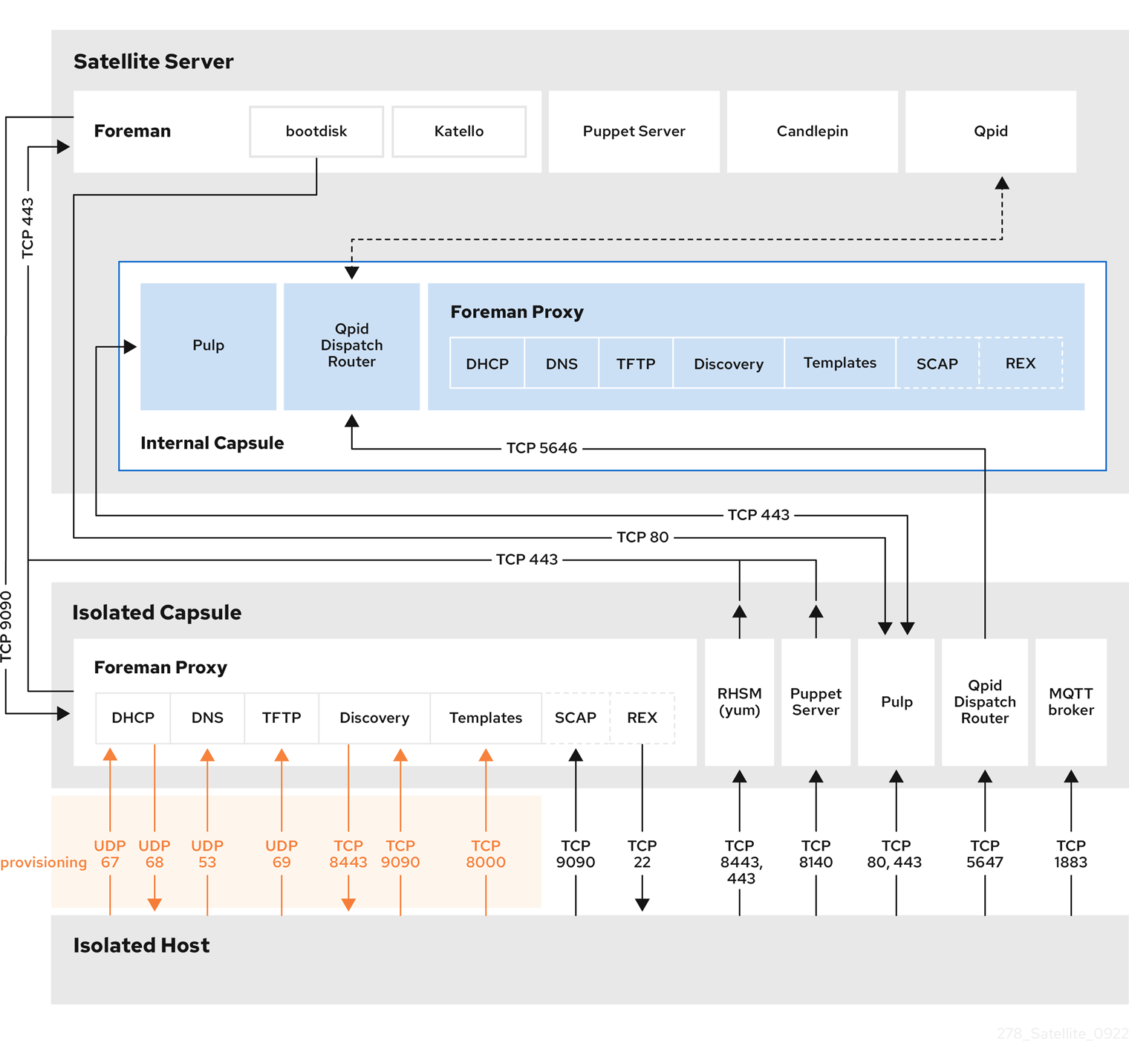

2.3. orcharhino Proxy Networking

The goal of orcharhino Proxy isolation is to provide a single endpoint for all of the host’s network communications so that in remote network segments, you need only open firewall ports to the orcharhino Proxy itself. The following diagram shows how the orcharhino components interact in the scenario with hosts connecting to an isolated orcharhino Proxy.

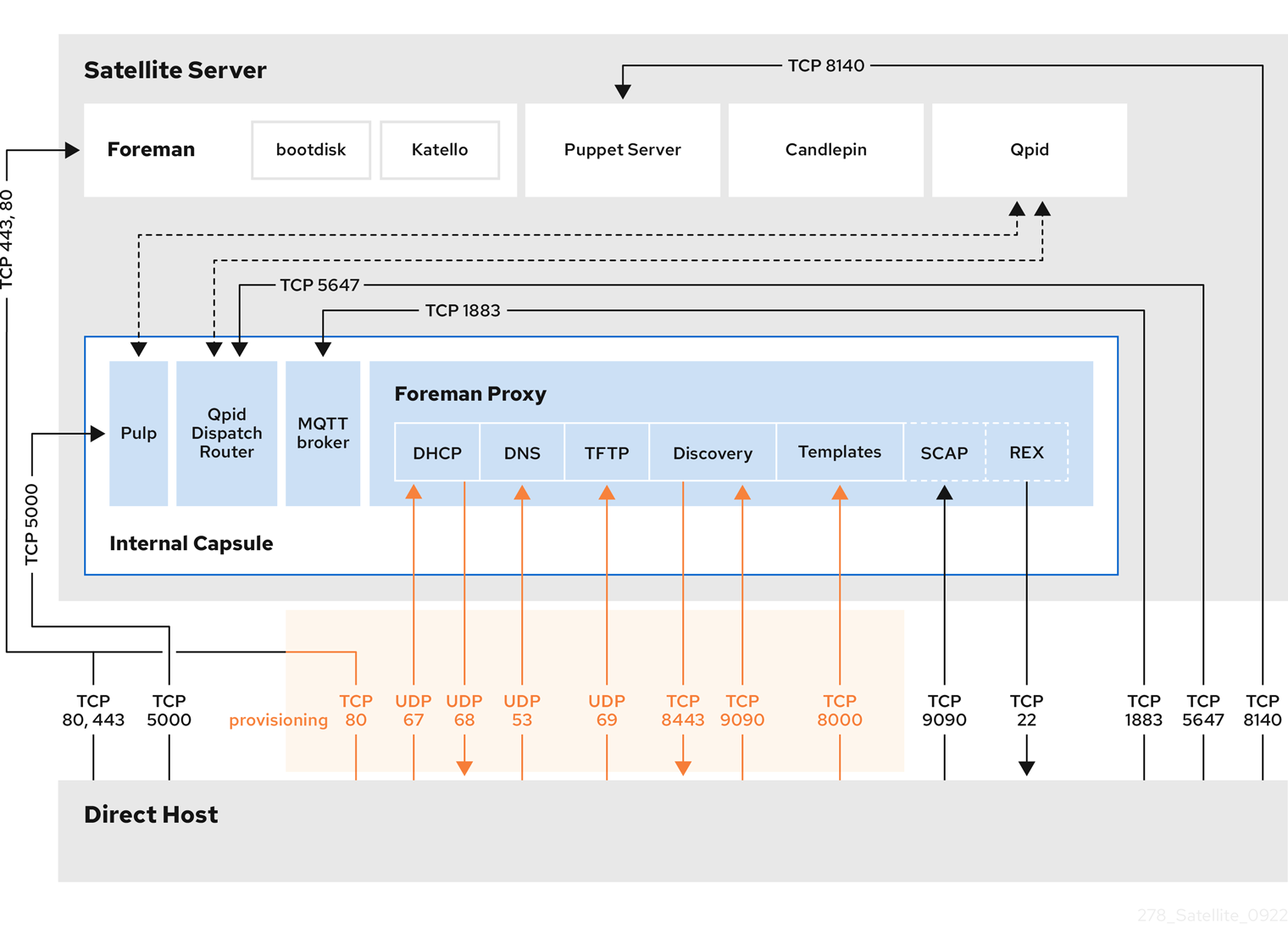

The following diagram shows how the orcharhino components interact when hosts connect directly to orcharhino Server. Note that because the base system of an external orcharhino Proxy is a client of orcharhino Server, this diagram is relevant even if you do not intend to have directly connected hosts.

You can find complete instructions for configuring the host-based firewall to open the required ports in the following documents:

- Ports and Firewalls Requirements in Installing orcharhino Server

- Ports and Firewalls Requirements in Installing orcharhino Proxy

3. Organizations, Locations, and Life Cycle Environments

orcharhino takes a consolidated approach to Organization and Location management. System administrators define multiple Organizations and multiple Locations in a single orcharhino Server. For example, a company might have three Organizations (Finance, Marketing, and Sales) across three countries (United States, United Kingdom, and Japan). In this example, orcharhino Server manages all Organizations across all geographical Locations, creating nine distinct contexts for managing systems. In addition, users can define specific locations and nest them to create a hierarchy. For example, orcharhino administrators might divide the United States into specific cities, such as Boston, Phoenix, or San Francisco.
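The nine contexts in this example are the combinations of each Organization with each Location. A short Python sketch of that arithmetic, using the names from the example:

```python
from itertools import product

organizations = ["Finance", "Marketing", "Sales"]
locations = ["United States", "United Kingdom", "Japan"]

# Every (organization, location) pair is one management context.
contexts = list(product(organizations, locations))
print(len(contexts))
for organization, location in contexts:
    print(f"{organization} / {location}")
```

Adding a fourth Organization or Location adds three more contexts, which is worth keeping in mind when deciding how finely to divide either dimension.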

orcharhino Server defines all locations and organizations. Each respective orcharhino Proxy synchronizes content and handles configuration of systems in a different location.

The main orcharhino Server retains the management function, while the content and configuration are synchronized between the main orcharhino Server and an orcharhino Proxy assigned to certain locations.

3.1. Organizations

Organizations divide orcharhino resources into logical groups based on ownership, purpose, content, security level, or other divisions. You can create and manage multiple organizations through orcharhino, then divide and assign your subscriptions to each individual organization. This provides a method of managing the content of several individual organizations under one management system.

3.2. Locations

Locations divide organizations into logical groups based on geographical location. Each location is created and used by a single account, although each account can manage multiple locations and organizations.

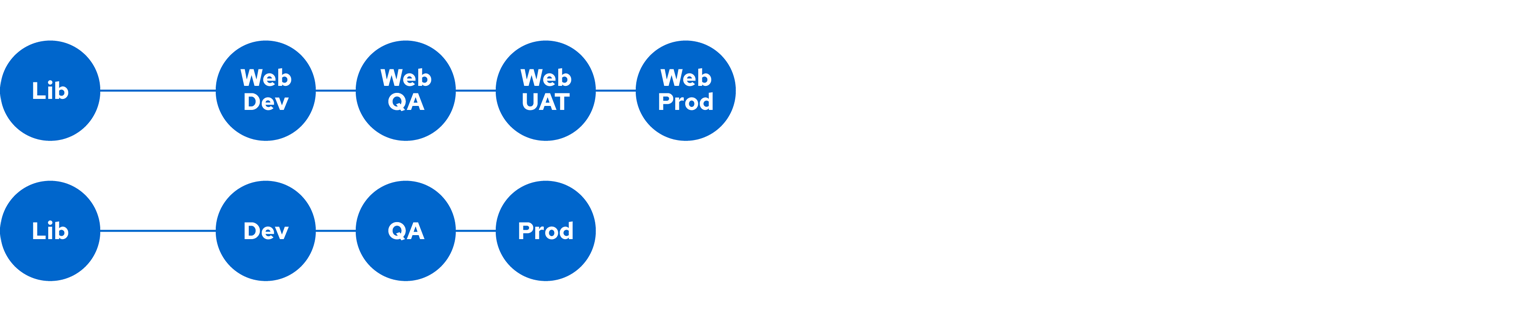

3.3. Life Cycle Environments

Application life cycles are divided into life cycle environments which represent each stage of the application life cycle. Life cycle environments are linked to form an environment path. You can promote content along the environment path to the next life cycle environment when required. For example, if development ends on a particular version of an application, you can promote this version to the testing environment and start development on the next version.

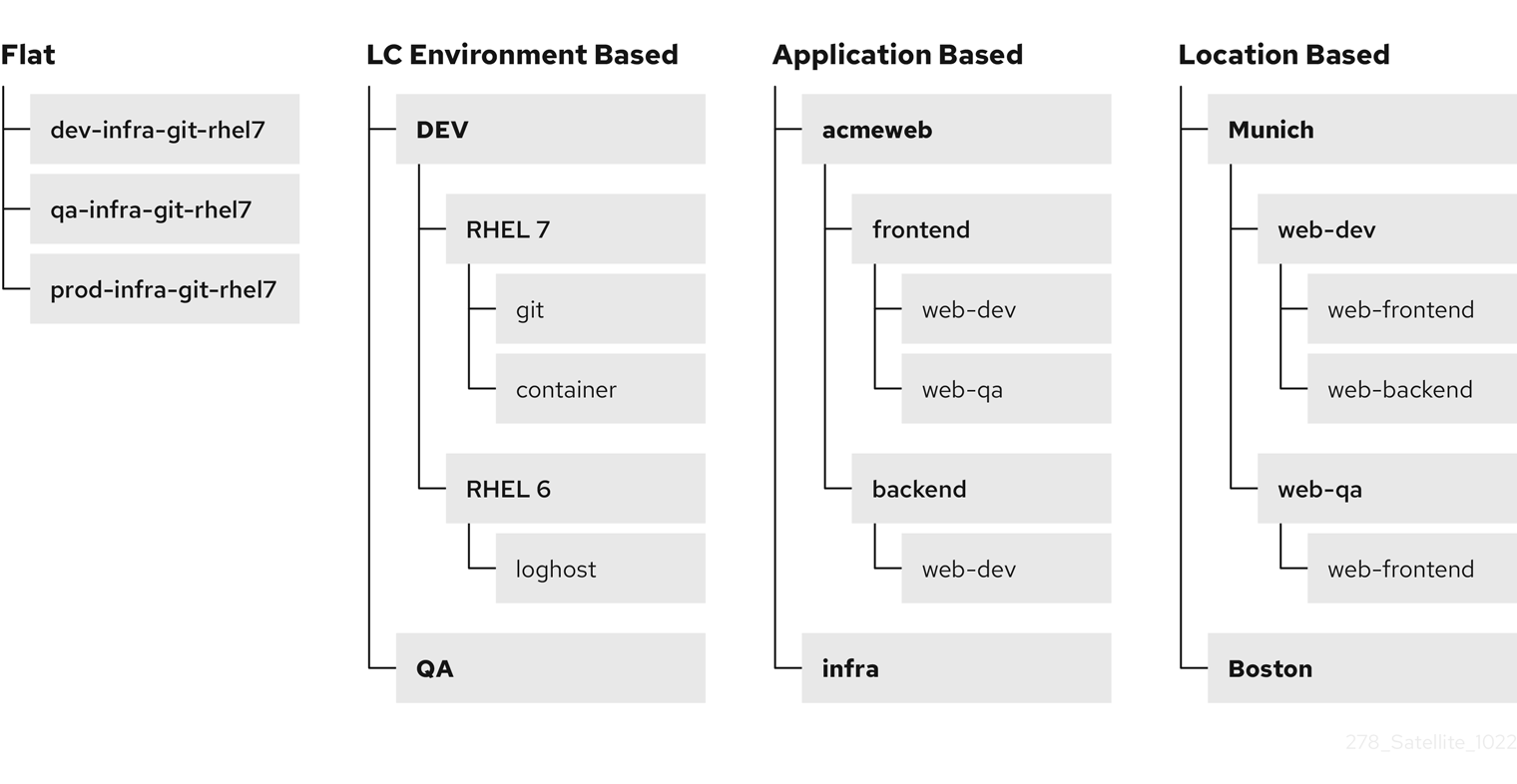

4. Host Grouping Concepts

Apart from the physical topology of orcharhino Proxies, orcharhino provides several logical units for grouping hosts. Hosts that are members of those groups inherit the group configuration. For example, the simple parameters that define the provisioning environment can be applied at the following levels:

Global > Organization > Location > Domain > Host group > Host
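One way to picture this inheritance is as a merge in which values set at a more specific level replace values set at a more general one. The Python sketch below assumes that precedence; the parameter names and values are made up for illustration.

```python
# Levels from least to most specific, in the order listed above.
LEVELS = ["Global", "Organization", "Location", "Domain", "Host group", "Host"]


def resolve(parameters: dict) -> dict:
    """Merge per-level parameters; more specific levels override general ones."""
    merged = {}
    for level in LEVELS:
        merged.update(parameters.get(level, {}))
    return merged


# Hypothetical parameters set at three of the six levels.
effective = resolve({
    "Global": {"ntp_server": "ntp.example.com", "timezone": "UTC"},
    "Location": {"ntp_server": "ntp.boston.example.com"},
    "Host": {"timezone": "America/New_York"},
})
print(effective)
```

Here the host keeps the location-wide NTP server but overrides the global time zone, so only the exceptions need to be defined at the lower levels.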

The main logical groups in orcharhino are:

- Organizations – the highest level logical groups for hosts. Organizations provide a strong separation of content and configuration. Each organization requires a separate Red Hat Subscription Manifest, and can be thought of as a separate virtual instance of an orcharhino Server. Avoid the use of organizations if a lower level host grouping is applicable.

- Locations – a grouping of hosts that should match the physical location. Locations can be used to map the network infrastructure to prevent incorrect host placement or configuration. For example, you cannot assign a subnet, domain, or compute resources directly to an orcharhino Proxy, only to a location.

- Host groups – the main carriers of host definitions including assigned Puppet classes, Content View, or operating system. It is recommended to configure the majority of settings at the host group level instead of defining hosts directly. Configuring a new host then largely becomes a matter of adding it to the right host group. As host groups can be nested, you can create a structure that best fits your requirements (see Host Group Structures).

- Host collections – a host registered to orcharhino Server for the purpose of subscription and content management is called a content host. Content hosts can be organized into host collections, which enables performing bulk actions such as package management or errata installation.

Locations and host groups can be nested. Organizations and host collections are flat.

4.1. Host Group Structures

The fact that host groups can be nested to inherit parameters from each other allows for designing host group hierarchies that fit particular workflows. A well planned host group structure can help to simplify the maintenance of host settings. This section outlines four approaches to organizing host groups.

Flat Structure

The advantage of a flat structure is limited complexity, as inheritance is avoided. In a deployment with few host types, this scenario is the best option. However, without inheritance there is a risk of high duplication of settings between host groups.

Life Cycle Environment Based Structure

In this hierarchy, the first host group level is reserved for parameters specific to a life cycle environment. The second level contains operating system related definitions, and the third level contains application specific settings. Such structure is useful in scenarios where responsibilities are divided among life cycle environments (for example, a dedicated owner for the Development, QA, and Production life cycle stages).

Application Based Structure

This hierarchy is based on roles of hosts in a specific application. For example, it enables defining network settings for groups of back-end and front-end servers. The selected characteristics of hosts are segregated, which supports Puppet-focused management of complex configurations. However, the content views can only be assigned to host groups at the bottom level of this hierarchy.

Location Based Structure

In this hierarchy, the distribution of locations is aligned with the host group structure. In a scenario where the location (orcharhino Proxy) topology determines many other attributes, this approach is the best option. On the other hand, this structure complicates sharing parameters across locations. In complex environments with a large number of applications, the number of host group changes required for each configuration change therefore increases significantly.

5. Provisioning Concepts

An important feature of orcharhino is unattended provisioning of hosts. To achieve this, orcharhino uses DNS and DHCP infrastructures, PXE booting, TFTP, and Kickstart. Use this chapter to understand the working principle of these concepts.

5.1. PXE Booting

Preboot execution environment (PXE) provides the ability to boot a system over a network. Instead of using local hard drives or a CD-ROM, PXE uses DHCP to provide the host with standard information about the network, to discover a TFTP server, and to download a boot image.

5.1.1. PXE Sequence

- The host boots the PXE image if no other bootable image is found.

- A NIC of the host sends a broadcast request to the DHCP server.

- The DHCP server receives the request and sends standard information about the network: IP address, subnet mask, gateway, DNS, the location of a TFTP server, and a boot image.

- The host obtains the boot loader image/pxelinux.0 and the configuration file pxelinux.cfg/00:MA:CA:AD:D from the TFTP server.

- The host configuration specifies the location of a kernel image, initrd and Kickstart.

- The host downloads the files and installs the image.
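The configuration file name in the sequence above is a placeholder for a name derived from the MAC address of the host. PXELINUX itself looks for a per-host file named after the ARP hardware type (01 for Ethernet) followed by the MAC address in lowercase hexadecimal, separated by dashes. The following Python sketch derives that name; the function name and the example MAC address are hypothetical, and the sketch makes no claim about how orcharhino generates the files it deploys.

```python
import string


def pxelinux_config_path(mac: str) -> str:
    """Return the per-host PXELINUX configuration path for a MAC address."""
    octets = mac.replace("-", ":").lower().split(":")
    valid = len(octets) == 6 and all(
        len(octet) == 2 and not set(octet) - set(string.hexdigits)
        for octet in octets
    )
    if not valid:
        raise ValueError(f"not a MAC address: {mac!r}")
    # "01" is the ARP hardware type for Ethernet.
    return "pxelinux.cfg/01-" + "-".join(octets)


path = pxelinux_config_path("52:54:00:AB:CD:EF")
print(path)
```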

For an example of using PXE Booting by orcharhino Server, see Provisioning Workflow in Provisioning Hosts.

5.1.2. PXE Booting Requirements

To provision machines using PXE booting, ensure that you meet the following requirements:

- Optional: If the host and the DHCP server are separated by a router, configure the DHCP relay agent and point to the DHCP server.

- Ensure that all the network-based firewalls are configured to allow clients on the subnet to access the orcharhino Proxy. For more information, see orcharhino Topology with Isolated orcharhino Proxy.

- Ensure that your client has access to the DHCP and TFTP servers.

- Ensure that both orcharhino Server and orcharhino Proxy have DNS configured and are able to resolve provisioned host names.

- Ensure that the UDP ports 67 and 68 are accessible by the client to enable the client to receive a DHCP offer with the boot options.

- Ensure that the UDP port 69 is accessible by the client so that the client can access the TFTP server on the orcharhino Proxy.

- Ensure that the TCP port 80 is accessible by the client to allow the client to download files and Kickstart templates from the orcharhino Proxy.

- Ensure that the host provisioning interface subnet has a DHCP orcharhino Proxy set.

- Ensure that the host provisioning interface subnet has a TFTP orcharhino Proxy set.

- Ensure that the host provisioning interface subnet has a Templates orcharhino Proxy set.

- Ensure that DHCP with the correct subnet is enabled using the orcharhino installer.

- Enable TFTP using the orcharhino installer.
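The port requirements above can be collected into a small checklist and partly verified from a machine on the client subnet. The Python sketch below probes only the TCP entry, because UDP services such as DHCP and TFTP do not answer a plain connection attempt and need a protocol-level test. The function names and the host name in the usage note are placeholders.

```python
import socket

# Ports named in the requirements above: (protocol, port, purpose).
PXE_PORTS = [
    ("udp", 67, "DHCP server"),
    ("udp", 68, "DHCP client"),
    ("udp", 69, "TFTP"),
    ("tcp", 80, "files and Kickstart templates"),
]


def tcp_port_open(host: str, port: int, timeout: float = 2.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within the timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False


def tcp_report(host: str) -> dict:
    """Probe the TCP entries of PXE_PORTS and map each port to its result."""
    return {
        port: tcp_port_open(host, port)
        for protocol, port, _purpose in PXE_PORTS
        if protocol == "tcp"
    }
```

For example, `tcp_report("orcharhino-proxy.example.com")` returns a dictionary with one entry for port 80. A `False` value points to a firewall or service problem between the client subnet and the orcharhino Proxy.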

5.2. HTTP Booting

You can use HTTP booting to boot systems over a network using HTTP.

5.2.1. HTTP Booting Requirements with managed DHCP

To provision machines through HTTP booting, ensure that you meet the following requirements:

For HTTP booting to work, ensure that your environment has the following client-side configurations:

- All the network-based firewalls are configured to allow clients on the subnet to access the orcharhino Proxy. For more information, see orcharhino Topology with Isolated orcharhino Proxy.

- Your client has access to the DHCP and DNS servers.

- Your client has access to the HTTP UEFI Boot orcharhino Proxy.

- Optional: If the host and the DHCP server are separated by a router, configure the DHCP relay agent and point to the DHCP server.

Although the TFTP protocol is not used for HTTP UEFI Booting, orcharhino uses the TFTP orcharhino Proxy API to deploy bootloader configuration.

For HTTP booting to work, ensure that orcharhino has the following configurations:

- Both orcharhino Server and orcharhino Proxy have DNS configured and are able to resolve provisioned host names.

- The UDP ports 67 and 68 are accessible by the client so that the client can send and receive a DHCP request and offer.

- The TCP port 8000 is open for the client to download the bootloader and Kickstart templates from the orcharhino Proxy.

- The TCP port 8443 is open for the client to download the bootloader from the orcharhino Proxy using the HTTPS protocol.

- The subnet that functions as the host’s provisioning interface has a DHCP orcharhino Proxy, an HTTP Boot orcharhino Proxy, a TFTP orcharhino Proxy, and a Templates orcharhino Proxy.

- The grub2-efi package is updated to the latest version. To update the grub2-efi package and run the installer to copy the recent bootloader from /boot into the /var/lib/tftpboot directory, enter the following commands:

      # dnf update grub2-efi
      # foreman-installer

5.2.2. HTTP Booting Requirements with unmanaged DHCP

To provision machines through HTTP booting without managed DHCP, ensure that you meet the following requirements:

- HTTP UEFI Boot URL must be set to one of:

  - http://orcharhino-proxy.example.com:8000

  - https://orcharhino-proxy.example.com:8443

- Ensure that your client has access to the DHCP and DNS servers.

- Ensure that your client has access to the HTTP UEFI Boot orcharhino Proxy.

- Ensure that all the network-based firewalls are configured to allow clients on the subnet to access the orcharhino Proxy. For more information, see orcharhino Topology with Isolated orcharhino Proxy.

- Ensure that an unmanaged DHCP server is available for clients.

- Ensure that an unmanaged DNS server is available for clients. If DNS is not available, use IP addresses to configure clients.

Although the TFTP protocol is not used for HTTP UEFI Booting, orcharhino uses the TFTP orcharhino Proxy API to deploy bootloader configuration.

- Ensure that both orcharhino Server and orcharhino Proxy have DNS configured and are able to resolve provisioned host names.

- Ensure that the UDP ports 67 and 68 are accessible by the client so that the client can send and receive a DHCP request and offer.

- Ensure that the TCP port 8000 is open for the client to download the bootloader and Kickstart templates from the orcharhino Proxy.

- Ensure that the TCP port 8443 is open for the client to download the bootloader from the orcharhino Proxy using the HTTPS protocol.

- Ensure that the host provisioning interface subnet has an HTTP Boot orcharhino Proxy set.

- Ensure that the host provisioning interface subnet has a TFTP orcharhino Proxy set.

- Ensure that the host provisioning interface subnet has a Templates orcharhino Proxy set.

- Update the grub2-efi package to the latest version and run the installer to copy the recent bootloader from the /boot directory into the /var/lib/tftpboot directory:

      # dnf update grub2-efi
      # foreman-installer

5.3. Secure Boot

When orcharhino is installed on Enterprise Linux using foreman-installer, grub2 and shim bootloaders that are signed by Red Hat are deployed into the TFTP and HTTP UEFI Boot directory.

PXE loader options named "SecureBoot" configure hosts to load shim.efi.

On Debian and Ubuntu operating systems, the grub2 bootloader is created with grub2-mkimage and is unsigned.

To perform Secure Boot, you must sign the bootloader manually and enroll the key into the EFI firmware.

Alternatively, grub2 from Ubuntu or Enterprise Linux can be copied to perform booting.

Grub2 in Enterprise Linux 8.0-8.3 was updated to mitigate the Boot Hole vulnerability, and the keys of existing Enterprise Linux kernels were invalidated. To boot any of the affected Enterprise Linux kernels (or OS installers), you must enroll keys manually into the EFI firmware for each host:

    # pesign -P -h -i /boot/vmlinuz-<version>
    # mokutil --import-hash <hash value returned from pesign>
    # reboot

5.4. Kickstart

You can use Kickstart to automate the installation process of an orcharhino Server or orcharhino Proxy by creating a Kickstart file that contains all the information that is required for the installation. For more information about Kickstart, see Performing an automated installation using Kickstart in Performing an advanced RHEL 8 installation.

5.4.1. Workflow

When you run an orcharhino Kickstart script, the following workflow occurs:

- It specifies the installation location of an orcharhino Server or an orcharhino Proxy.

- It installs the predefined packages.

- It installs Subscription Manager.

- It uses Activation Keys to subscribe the hosts to orcharhino.

- It installs Puppet, and configures a puppet.conf file to indicate the orcharhino or orcharhino Proxy instance.

- It enables Puppet to run and request a certificate.

- It runs user defined snippets.

orcharhino Deployment Planning

6. Deployment Considerations

This section provides an overview of general topics to be considered when planning an orcharhino deployment, together with recommendations and references to more specific documentation.

6.1. orcharhino Server with External Database

When you install orcharhino, the foreman-installer command creates databases on the same server on which you install orcharhino.

Depending on your requirements, moving to external databases can provide increased working memory for orcharhino, which can improve response times for database operations.

Moving to external databases distributes the workload and can increase the capacity for performance tuning.

Consider using external databases if you plan to use your orcharhino deployment for the following scenarios:

- Frequent remote execution tasks. This creates a high volume of records in PostgreSQL and generates heavy database workloads.

- High disk I/O workloads from frequent repository synchronization or Content View publishing. This causes orcharhino to create a record in PostgreSQL for each job.

- High volume of hosts.

- High volume of synced content.

For more information about using an external database, see Using External Databases with orcharhino in Installing orcharhino Server.

6.2. Locations and Topology

This section outlines general considerations that should help you to specify your orcharhino deployment scenario. The most common deployment scenarios are listed in Common Deployment Scenarios. The defining questions are:

-

How many orcharhino Proxies do I need? – The number of geographic locations where your organization operates should translate to the number of orcharhino Proxies. By assigning a orcharhino Proxy to each location, you decrease the load on orcharhino Server, increase redundancy, and reduce bandwidth usage. orcharhino Server itself can act as a orcharhino Proxy (it contains an integrated orcharhino Proxy by default). This can be used in single location deployments and to provision the base system’s of orcharhino Proxies. Using the integrated orcharhino Proxy to communicate with hosts in remote locations is not recommended as it can lead to suboptimal network utilization.

-

What services will be provided by orcharhino Proxies? – After establishing the number of orcharhino Proxies, decide what services will be enabled on each orcharhino Proxy. Even though the whole stack of content and configuration management capabilities is available, some infrastructure services (DNS, DHCP, TFTP) can be outside of an orcharhino administrator’s control. In such a case, orcharhino Proxies have to integrate with those external services (see orcharhino Proxy with External Services).

-

What compute resources do I need for my hosts? – Apart from provisioning bare metal hosts, you can use various compute resources supported by orcharhino. To learn about provisioning on different compute resources see Provisioning Hosts.
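The first planning question can be sketched in a few lines of Python. This is only an illustration of the "one orcharhino Proxy per location" guideline described above. The function name, the location names, and the labels "integrated" and "standalone" are assumptions made for this example and are not part of any orcharhino tooling.

```python
def plan_proxies(host_locations, server_location):
    """Return {location: proxy kind}, with one orcharhino Proxy per location.

    Hosts in the same location as orcharhino Server use the integrated
    orcharhino Proxy. Every other location gets its own standalone one.
    """
    plan = {}
    for location in sorted(set(host_locations)):
        if location == server_location:
            plan[location] = "integrated"
        else:
            plan[location] = "standalone"
    return plan


# Hypothetical inventory: one entry per host, naming the host's location.
plan = plan_proxies(
    ["berlin", "berlin", "munich", "vienna"],
    server_location="berlin",
)
print(plan)
```

In this sketch, the number of standalone orcharhino Proxies equals the number of remote locations, which matches the recommendation not to serve remote hosts from the integrated orcharhino Proxy.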

6.3. Content Sources

The Red Hat Subscription Manifest determines what Red Hat repositories are accessible from your orcharhino Server. Once you enable a Red Hat repository, an associated orcharhino Product is created automatically. For distributing content from custom sources, you need to create products and repositories manually. Red Hat repositories are signed with GPG keys by default, and it is recommended to create GPG keys for your custom repositories as well. The configuration of custom repositories depends on the type of content they hold (RPM packages or Docker images).

Repositories configured as yum repositories that contain only RPM packages can make use of the new download policy setting to save on synchronization time and storage space.

This setting offers two options: Immediate and On demand.

The On demand setting saves space and time by only downloading packages when requested by clients.

For detailed instructions on setting up content sources see Importing Content in Managing Content.

A custom repository within orcharhino Server is in most cases populated with content from an external staging server. Such servers lie outside of the orcharhino infrastructure; however, it is recommended to use a revision control system (such as Git) on these servers to have better control over the custom content.
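The difference between the Immediate and On demand download policies can be illustrated with a toy model. The class below is a sketch written for this guide, not orcharhino or Pulp code. The class name, the method names, the package names, and the sizes are all assumptions. The model only shows that both policies synchronize metadata, while On demand defers fetching packages until a client requests them.

```python
class Repository:
    """Toy model of a yum repository with a download policy (illustrative only)."""

    def __init__(self, upstream_packages, download_policy):
        if download_policy not in ("immediate", "on_demand"):
            raise ValueError(f"unknown download policy: {download_policy}")
        self.upstream = dict(upstream_packages)  # package name -> size in MB
        self.download_policy = download_policy
        self.metadata = set()  # package names known after synchronization
        self.stored = {}       # packages actually downloaded to local storage

    def sync(self):
        """Synchronize metadata; download packages only with the immediate policy."""
        self.metadata = set(self.upstream)
        if self.download_policy == "immediate":
            self.stored = dict(self.upstream)

    def request(self, name):
        """Serve a package to a client, fetching it first if it is not stored yet."""
        if name not in self.metadata:
            raise KeyError(name)
        if name not in self.stored:
            self.stored[name] = self.upstream[name]
        return name

    def disk_usage(self):
        return sum(self.stored.values())


upstream = {"bash": 2, "kernel": 60, "vim": 8}  # hypothetical sizes in MB

immediate = Repository(upstream, "immediate")
immediate.sync()

on_demand = Repository(upstream, "on_demand")
on_demand.sync()
on_demand.request("vim")  # only this package is downloaded

print(immediate.disk_usage(), on_demand.disk_usage())
```

The trade-off visible in the sketch is that the first client request for a package under On demand includes the time needed to fetch it from the upstream source.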

6.4. Content Life Cycle

orcharhino provides features for precise management of the content life cycle. A life cycle environment represents a stage in the content life cycle. A Content View is a filtered set of content and can be considered a defined subset of content. By associating Content Views with life cycle environments, you make content available to hosts in a defined way. See Content Life Cycle in orcharhino for a visualization of the process. For a detailed overview of the content management process, see Importing Custom Content in Managing Content. The following section provides general scenarios for deploying content views as well as life cycle environments.

The default life cycle environment called Library gathers content from all connected sources. It is not recommended to associate hosts directly with the Library as it prevents any testing of content before making it available to hosts. Instead, create a life cycle environment path that suits your content workflow. The following scenarios are common:

-

A single life cycle environment – content from Library is promoted directly to the production stage. This approach limits the complexity but still allows for testing the content within the Library before making it available to hosts.

-

A single life cycle environment path – both operating system and application content are promoted through the same path. The path can consist of several stages (for example Development, QA, Production), which enables thorough testing but requires additional effort.

-

Application specific life cycle environment paths – each application has a separate path, which allows for individual application release cycles. You can associate specific compute resources with application life cycle stages to facilitate testing. On the other hand, this scenario increases the maintenance complexity.
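A life cycle environment path can be pictured as an ordered list of stages that starts with Library. The following sketch models publishing and promoting along such a path. It is a simplified illustration, not orcharhino code. The class and method names are assumptions, and the rule that a version must already be in the preceding stage before it can be promoted is a simplification chosen for this example.

```python
class ContentView:
    """Toy model of a content view moving along one life cycle environment path."""

    def __init__(self, name, path):
        self.name = name
        # Library is always the first stage; the rest is the user-defined path.
        self.path = ["Library"] + list(path)
        self.next_version = 1
        self.environments = {}  # environment name -> version available there

    def publish(self):
        """Create a new version and make it available in Library."""
        version = self.next_version
        self.next_version += 1
        self.environments["Library"] = version
        return version

    def promote(self, version, to_environment):
        """Promote a version to the next stage; skipping stages is rejected here."""
        index = self.path.index(to_environment)  # ValueError for unknown stages
        if index == 0:
            raise ValueError("versions reach Library by publishing, not promoting")
        previous = self.path[index - 1]
        if self.environments.get(previous) != version:
            raise ValueError(
                f"version {version} is not in {previous}, "
                f"cannot promote to {to_environment}"
            )
        self.environments[to_environment] = version


cv = ContentView("base-os", ["Development", "QA", "Production"])
v1 = cv.publish()
cv.promote(v1, "Development")
cv.promote(v1, "QA")
v2 = cv.publish()  # Library moves on; Development and QA still serve version 1
print(cv.environments)
```

Hosts associated with Development or QA keep seeing version 1 until version 2 is promoted, which is the testing buffer that a multi-stage path provides over attaching hosts directly to Library.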

The following content view scenarios are common:

-

All in one content view – a content view that contains all necessary content for the majority of your hosts. Reducing the number of content views is an advantage in deployments with constrained resources (time, storage space) or with uniform host types. However, this scenario limits the content view capabilities such as time based snapshots or intelligent filtering. Any change in content sources affects a proportion of hosts.

-

Host specific content view – a dedicated content view for each host type. This approach can be useful in deployments with a small number of host types (up to 30). However, it prevents sharing content across host types as well as separation based on criteria other than the host type (for example between operating system and applications). With critical updates every content view has to be updated, which increases maintenance efforts.

-

Host specific composite content view – a dedicated combination of content views for each host type. This approach enables separating host specific and shared content, for example you can have dedicated content views for the operating system and application content. By using a composite, you can manage your operating system and applications separately and at different frequencies.

-

Component based content view – a dedicated content view for a specific application. For example a database content view can be included into several composite content views. This approach allows for greater standardization but it leads to an increased number of content views.

The optimal solution depends on the nature of your host environment. Avoid creating a large number of content views, but keep in mind that the size of a content view affects the speed of related operations (publishing, promoting). Also make sure that when creating a subset of packages for the content view, all dependencies are included as well. Note that kickstart repositories should not be added to content views, as they are used for host provisioning only.
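The composite and component based scenarios can be sketched as set operations. The example below is a minimal model, assuming that a content view version is just a named, versioned set of package names. The names `ContentViewVersion` and `composite`, as well as the package names, are hypothetical and chosen for illustration only.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ContentViewVersion:
    name: str
    version: int
    packages: frozenset


def composite(name, version, components):
    """Combine component content view versions into one composite version."""
    packages = frozenset().union(*(c.packages for c in components))
    return ContentViewVersion(name, version, packages)


# Component content views, published at different frequencies.
os_v1 = ContentViewVersion("os", 1, frozenset({"bash", "glibc"}))
db_v1 = ContentViewVersion("database", 1, frozenset({"postgresql"}))
db_v2 = ContentViewVersion("database", 2, frozenset({"postgresql", "pgaudit"}))

# The composite for database hosts is rebuilt when any component changes.
db_host_v1 = composite("db-host", 1, [os_v1, db_v1])
db_host_v2 = composite("db-host", 2, [os_v1, db_v2])

print(sorted(db_host_v2.packages - db_host_v1.packages))
```

Here only the database component was republished. The operating system component stayed at version 1, which reflects the benefit of managing operating system and application content separately.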

6.5. Content Deployment

Content deployment is the management of errata and packages on content hosts. There are two methods for content deployment on orcharhino. The default method is remote execution, and the second method is the deprecated Katello agent.

-

Remote execution – Remote execution allows installation, update, or removal of packages, the bootstrap of configuration management agents, and the trigger of Puppet runs. You can configure orcharhino to perform remote execution either over SSH (push based) or over MQTT/HTTPS (pull based). While remote execution is enabled on orcharhino Server by default, it is disabled on orcharhino Proxies and content hosts. You must enable it manually.

This is the preferred method for content deployment.

-

Katello agent – Katello agent installs and updates packages. It uses the goferd service, which communicates to and from the orcharhino Server. It is enabled and started automatically on content hosts after a successful installation of the katello-agent package.

Note that the Katello agent is deprecated and will be removed in a future orcharhino release.

6.6. Provisioning

orcharhino provides several features to help you automate the host provisioning, including provisioning templates, configuration management with Puppet, and host groups for standardized provisioning of host roles. For a description of the provisioning workflow see Provisioning Workflow in Provisioning Hosts. The same guide contains instructions for provisioning on various compute resources.

6.7. Role Based Authentication

Assigning a role to a user enables controlling access to orcharhino components based on a set of permissions. You can think of role based authentication as a way of hiding unnecessary objects from users who are not supposed to interact with them.

There are various criteria for distinguishing among different roles within an organization. Apart from the administrator role, the following types are common:

-

Roles related to applications or parts of infrastructure – for example, roles for owners of Red Hat Enterprise Linux as the operating system versus owners of application servers and database servers.

-

Roles related to a particular stage of the software life cycle – for example, roles divided among the development, testing, and production phases, where each phase has one or more owners.

-

Roles related to specific tasks – such as security manager or license manager.

When defining a custom role, consider the following recommendations:

-

Define the expected tasks and responsibilities – define the subset of the orcharhino infrastructure that will be accessible to the role as well as actions permitted on this subset. Think of the responsibilities of the role and how it would differ from other roles.

-

Use predefined roles whenever possible – orcharhino provides a number of sample roles that can be used alone or as part of a role combination. Copying and editing an existing role can be a good start for creating a custom role.

-

Consider all affected entities – for example, a content view promotion automatically creates new Puppet Environments for the particular life cycle environment and content view combination. Therefore, if a role is expected to promote content views, it also needs permissions to create and edit Puppet Environments.

-

Consider areas of interest – even though a role has a limited area of responsibility, there might be a wider area of interest. Therefore, you can grant the role read-only access to parts of the orcharhino infrastructure that influence its area of responsibility. This allows users to get earlier access to information about potential upcoming changes.

-

Add permissions step by step – test your custom role to make sure it works as intended. A good approach in case of problems is to start with a limited set of permissions, add permissions step by step, and test continuously.

For instructions on defining roles and assigning them to users, see Managing Users and Roles in Administering orcharhino. The same guide contains information on configuring external authentication sources.
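The relationship between users, roles, and permissions can be sketched as follows. This is a conceptual model only, not the orcharhino implementation. The classes and the role names are assumptions. The permission names are the Architecture permissions listed under Permission in the glossary.

```python
class Role:
    def __init__(self, name, permissions):
        self.name = name
        self.permissions = frozenset(permissions)


class User:
    def __init__(self, login, roles):
        self.login = login
        self.roles = list(roles)

    def can(self, permission):
        """A user may perform an action if any assigned role grants it."""
        return any(permission in role.permissions for role in self.roles)


# Hypothetical roles: a read-only role and a role added step by step on top.
viewer = Role("architecture-viewer", {"view_architectures"})
editor = Role("architecture-editor", {"create_architectures", "edit_architectures"})

alice = User("alice", [viewer])          # area of interest: read-only access
bob = User("bob", [viewer, editor])      # area of responsibility: can edit

print(alice.can("view_architectures"), alice.can("edit_architectures"))
print(bob.can("edit_architectures"), bob.can("destroy_architectures"))
```

Starting with the viewer role and adding the editor role later mirrors the recommendation to add permissions step by step and test after each change.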

6.8. Additional Tasks

This section provides a short overview of selected orcharhino capabilities that can be used for automating certain tasks or extending the core usage of orcharhino:

-

Discovering bare metal hosts – the orcharhino Discovery plug-in enables automatic bare-metal discovery of unknown hosts on the provisioning network. These new hosts register themselves to orcharhino Server and the Puppet Agent on the client uploads system facts collected by Facter, such as serial ID, network interface, memory, and disk information. After registration you can initialize provisioning of those discovered hosts. For more information, see Creating Hosts from Discovered Hosts in Provisioning Hosts.

-

Backup management – for backup and disaster recovery instructions, see Backing Up orcharhino Server and orcharhino Proxy in Administering orcharhino. Using remote execution, you can also configure recurring backup tasks on managed hosts. For more information on remote execution, see Configuring and Setting up Remote Jobs in Managing Hosts.

-

Security management – orcharhino supports security management in various ways, including update and errata management, OpenSCAP integration for system verification, update and security compliance reporting, and fine grained role based authentication. Find more information on errata management and OpenSCAP concepts in Managing Hosts.

-

Incident management – orcharhino supports the incident management process by providing a centralized overview of all systems including reporting and email notifications. Detailed information on each host is accessible from orcharhino Server, including the event history of recent changes.

-

Scripting with Hammer and API – orcharhino provides a command line tool called Hammer that provides a CLI equivalent to the majority of web UI procedures. In addition, you can use the access to the orcharhino API to write automation scripts in a selected programming language.
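As an illustration of API scripting, the following Python sketch builds a request that lists hosts. It is a minimal example under stated assumptions: the `/api/hosts` endpoint, the `search` and `per_page` parameters, basic authentication, and the `results` key in the response are assumed to follow the upstream Foreman API, so check the API documentation on your orcharhino Server before relying on them. The server name and credentials are placeholders.

```python
import base64
import json
import urllib.parse
import urllib.request


def build_hosts_request(base_url, user, password, search=None, per_page=20):
    """Build (but do not send) a GET request for the host list."""
    query = {"per_page": per_page}
    if search:
        query["search"] = search
    url = f"{base_url.rstrip('/')}/api/hosts?{urllib.parse.urlencode(query)}"
    token = base64.b64encode(f"{user}:{password}".encode()).decode()
    headers = {"Authorization": f"Basic {token}", "Accept": "application/json"}
    return urllib.request.Request(url, headers=headers)


def list_host_names(request):
    """Send the request and return host names (assumes a 'results' list)."""
    with urllib.request.urlopen(request) as response:
        payload = json.load(response)
    return [host["name"] for host in payload["results"]]


# Placeholder server and credentials; nothing is sent until list_host_names runs.
request = build_hosts_request(
    "https://orcharhino.example.com", "admin", "changeme", search="os = CentOS"
)
print(request.full_url)
```

Separating request construction from sending keeps the script easy to test without a running server. For one-off queries, the Hammer CLI is usually the shorter route.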

7. Common Deployment Scenarios

This section provides a brief overview of common deployment scenarios for orcharhino. Note that many variations and combinations of the following layouts are possible.

7.1. Single Location

An integrated orcharhino Proxy is a virtual orcharhino Proxy that is created by default in orcharhino Server during the installation process. This means orcharhino Server can be used to provision directly connected hosts for an orcharhino deployment in a single geographical location, so only one physical server is needed. The base systems of isolated orcharhino Proxies can be directly managed by orcharhino Server; however, it is not recommended to use this layout to manage other hosts in remote locations.

7.2. Single Location with Segregated Subnets

Your infrastructure might require multiple isolated subnets even if orcharhino is deployed in a single geographic location. This can be achieved for example by deploying multiple orcharhino Proxies with DHCP and DNS services, but the recommended way is to create segregated subnets using a single orcharhino Proxy. This orcharhino Proxy is then used to manage hosts and compute resources in those segregated networks to ensure they only have to access the orcharhino Proxy for provisioning, configuration, errata, and general management. For more information on configuring subnets see Managing Hosts.

7.3. Multiple Locations

It is recommended to create at least one orcharhino Proxy per geographic location. This practice can save bandwidth since hosts obtain content from a local orcharhino Proxy. Synchronization of content from remote repositories is done only by the orcharhino Proxy, not by each host in a location. In addition, this layout makes the provisioning infrastructure more reliable and easier to configure. See orcharhino System Architecture for an illustration of this approach.

7.4. orcharhino Proxy with External Services

You can configure an orcharhino Proxy (integrated or standalone) to use an external DNS, DHCP, or TFTP service. If you already have a server that provides these services in your environment, you can integrate it with your orcharhino deployment. For information about how to configure an orcharhino Proxy with external services, see Configuring orcharhino Proxy with External Services in Installing orcharhino Proxy.

Appendix A: Technical Users Provided and Required by orcharhino

During the installation of orcharhino, system accounts are created. They are used to manage files and process ownership of the components integrated into orcharhino. Some of these accounts have fixed UIDs and GIDs, while others take the next available UID and GID on the system instead. To control the UIDs and GIDs assigned to accounts, you can define accounts before installing orcharhino. Because some of the accounts have hard-coded UIDs and GIDs, it is not possible to do this with all accounts created during orcharhino installation.

The following table lists all the accounts created by orcharhino during installation. You can predefine accounts that have Yes in the Flexible UID and GID column with custom UID and GID before installing orcharhino.

Do not change the home directories or shells of system accounts because orcharhino requires them to work correctly.

Because of potential conflicts with local users that orcharhino creates, you cannot use external identity providers for the system users of the orcharhino base operating system.

| User name | UID | Group name | GID | Flexible UID and GID | Home | Shell |
|---|---|---|---|---|---|---|
| foreman | N/A | foreman | N/A | yes | /usr/share/foreman | /sbin/nologin |
| foreman-proxy | N/A | foreman-proxy | N/A | yes | /usr/share/foreman-proxy | /sbin/nologin |
| apache | 48 | apache | 48 | no | /usr/share/httpd | /sbin/nologin |
| postgres | 26 | postgres | 26 | no | /var/lib/pgsql | /bin/bash |
| pulp | N/A | pulp | N/A | no | N/A | /sbin/nologin |
| puppet | 52 | puppet | 52 | no | /opt/puppetlabs/server/data/puppetserver | /sbin/nologin |
| qdrouterd | N/A | qdrouterd | N/A | yes | /var/lib/qdrouterd | /sbin/nologin |
| qpidd | N/A | qpidd | N/A | yes | /var/lib/qpidd | /sbin/nologin |
| saslauth | N/A | saslauth | 76 | no | /run/saslauthd | /sbin/nologin |
| tomcat | 53 | tomcat | 53 | no | /usr/share/tomcat | /bin/nologin |
| unbound | N/A | unbound | N/A | yes | /etc/unbound | /sbin/nologin |
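Before installing orcharhino, it can be useful to check whether any of the fixed UIDs and GIDs from the table above are already taken by a different local account. The following Python sketch shows one possible way to do that. It is not part of orcharhino. The helper names are assumptions, and the fixed IDs are copied from the table, so update them if the table changes for your version.

```python
import grp
import pwd

# Fixed IDs from the table above: account -> (UID, GID); None means no fixed value.
FIXED_IDS = {
    "apache": (48, 48),
    "postgres": (26, 26),
    "puppet": (52, 52),
    "tomcat": (53, 53),
    "saslauth": (None, 76),
}


def find_conflicts(fixed_ids, uid_owner, gid_owner):
    """Report fixed IDs that already belong to a differently named user or group.

    uid_owner and gid_owner are callables that return the owning name or None.
    """
    conflicts = []
    for account, (uid, gid) in sorted(fixed_ids.items()):
        if uid is not None:
            owner = uid_owner(uid)
            if owner not in (None, account):
                conflicts.append(f"UID {uid} needed by {account} is used by {owner}")
        if gid is not None:
            owner = gid_owner(gid)
            if owner not in (None, account):
                conflicts.append(f"GID {gid} needed by {account} is used by {owner}")
    return conflicts


def system_uid_owner(uid):
    """Look up the local user name for a UID, or None if the UID is free."""
    try:
        return pwd.getpwuid(uid).pw_name
    except KeyError:
        return None


def system_gid_owner(gid):
    """Look up the local group name for a GID, or None if the GID is free."""
    try:
        return grp.getgrgid(gid).gr_name
    except KeyError:
        return None


if __name__ == "__main__":
    for line in find_conflicts(FIXED_IDS, system_uid_owner, system_gid_owner):
        print(line)
```

The lookups are passed in as functions so that the check can be exercised with test data. On a real system, run the script as is on the machine where you plan to install orcharhino.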

Appendix B: Glossary of Terms

This glossary documents various terms used in relation to orcharhino.

- Activation Key

-

A token for host registration and subscription attachment. Activation keys define subscriptions, products, content views, and other parameters to be associated with a newly created host.

- Answer File

-

A configuration file that defines settings for an installation scenario. Answer files are defined in the YAML format and stored in the /etc/foreman-installer/scenarios.d/ directory.

- ARF Report

-

The result of an OpenSCAP audit. Summarizes the security compliance of hosts managed by orcharhino.

- Audits

-

Provide a report on changes made by a specific user. Audits can be viewed in the orcharhino management UI under Monitor > Audits.

- Baseboard Management Controller (BMC)

-

Enables remote power management of bare-metal hosts. In orcharhino, you can create a BMC interface to manage selected hosts.

- Boot Disk

-

An ISO image used for PXE-less provisioning. This ISO enables the host to connect to orcharhino Server, boot the installation media, and install the operating system. There are several kinds of boot disks: host image, full host image, generic image, and subnet image.

- orcharhino Proxy

-

An additional server that can be used in an orcharhino deployment to facilitate content federation and distribution (act as a Pulp mirror), and to run other localized services (Puppet server, DHCP, DNS, TFTP, and more). orcharhino Proxies are useful for orcharhino deployments across various geographical locations.

- Catalog

-

A document that describes the desired system state for one specific host managed by Puppet. It lists all of the resources that need to be managed, as well as any dependencies between those resources. Catalogs are compiled by a Puppet server from Puppet Manifests and data from Puppet Agents.

- Candlepin

-

A service within Katello responsible for subscription management.

- Compliance Policy

-

Refers to a scheduled task executed on orcharhino Server that checks the specified hosts for compliance against SCAP content.

- Compute Profile

-

Specifies default attributes for new virtual machines on a compute resource.

- Compute Resource

-

A virtual or cloud infrastructure, which orcharhino uses for deployment of hosts and systems. Examples include oVirt, OpenStack, EC2, and VMWare.

- Container (Docker Container)

-

An isolated application sandbox that contains all runtime dependencies required by an application. orcharhino supports container provisioning on a dedicated compute resource.

- Container Image

-

A static snapshot of the container’s configuration. orcharhino supports various methods of importing container images as well as distributing images to hosts through content views.

- Content

-

A general term for everything orcharhino distributes to hosts. This includes software packages (RPM files) and Docker images. Content is synchronized into the Library and then promoted into life cycle environments using content views so that it can be consumed by hosts.

- Content Delivery Network (CDN)

-

The mechanism used to deliver Red Hat content to orcharhino Server.

- Content Host

-

The part of a host that manages tasks related to content and subscriptions.

- Content View

-

A subset of Library content created by intelligent filtering. Once a content view is published, it can be promoted through the life cycle environment path, or modified using incremental upgrades.

- Discovered Host

-

A bare-metal host detected on the provisioning network by the Discovery plug-in.

- Discovery Image

-

Refers to the minimal operating system based on Enterprise Linux that is PXE-booted on hosts to acquire initial hardware information and to communicate with orcharhino Server before starting the provisioning process.

- Discovery Plug-in

-

Enables automatic bare-metal discovery of unknown hosts on the provisioning network. The plug-in consists of three components: services running on orcharhino Server and orcharhino Proxy, and the Discovery image running on the host.

- Discovery Rule

-

A set of predefined provisioning rules which assigns a host group to discovered hosts and triggers provisioning automatically.

- Docker Tag

-

A mark used to differentiate container images, typically by the version of the application stored in the image. In the orcharhino management UI, you can filter images by tag under Content > Docker Tags.

- ERB

-

Embedded Ruby (ERB) is a template syntax used in provisioning and job templates.

- Errata

-

Updated RPM packages containing security fixes, bug fixes, and enhancements. In relation to a host, an erratum is applicable if it updates a package installed on the host, and installable if it is present in the host’s content view (which means it is accessible for installation on the host).

- External Node Classifier

-

A construct that provides additional data for a server to use when configuring hosts. orcharhino acts as an External Node Classifier to Puppet servers in an orcharhino deployment.

Note that the External Node Classifier will be removed in the next orcharhino version.

- Facter

-

A program that provides information (facts) about the system on which it is run; for example, Facter can report total memory, operating system version, architecture, and more. Puppet modules enable specific configurations based on host data gathered by Facter.

- Facts

-

Host parameters such as total memory, operating system version, or architecture. Facts are reported by Facter and used by Puppet.

- Foreman

-

The component mainly responsible for provisioning and content life cycle management.

- orcharhino services

-

A set of services that orcharhino Server and orcharhino Proxies use for operation. You can use the foreman-maintain tool to manage these services. To see the full list of services, enter the foreman-maintain service list command on the machine where orcharhino Server or orcharhino Proxy is installed.

- Foreman Hook

-

An executable that is automatically triggered when an orchestration event occurs, such as when a host is created or when provisioning of a host has completed.

- Full Host Image

-

A boot disk used for PXE-less provisioning of a specific host. The full host image contains an embedded Linux kernel and init RAM disk of the associated operating system installer.

- Generic Image

-

A boot disk for PXE-less provisioning that is not tied to a specific host. The generic image sends the host’s MAC address to orcharhino Server, which matches it against the host entry.

- Hammer

-

A command line tool for managing orcharhino. You can execute Hammer commands from the command line or utilize them in scripts. Hammer also provides an interactive shell.

- Host

-

Refers to any system, either physical or virtual, that orcharhino manages.

- Host Collection

-

A user defined group of one or more Hosts used for bulk actions such as errata installation.

- Host Group

-

A template for building a host. Host groups hold shared parameters, such as subnet or life cycle environment, that are inherited by host group members. Host groups can be nested to create a hierarchical structure.

- Host Image

-

A boot disk used for PXE-less provisioning of a specific host. The host image only contains the boot files necessary to access the installation media on orcharhino Server.

- Incremental Upgrade (of a Content View)

-

The act of creating a new (minor) content view version in a life cycle environment. Incremental upgrades provide a way to make in-place modification of an already published content view. Useful for rapid updates, for example when applying security errata.

- Job

-

A command executed remotely on a host from orcharhino Server. Every job is defined in a job template.

- Job Template

-

Defines properties of a job.

- Katello

-

A Foreman plug-in responsible for subscription and repository management.

- Lazy Sync

-

The ability to change a yum repository’s default download policy of Immediate to On Demand. The On Demand setting saves storage space and synchronization time by only downloading the packages when requested by a client.

- Location

-

A collection of default settings that represent a physical place.

- Library

-

A container for content from all synchronized repositories on orcharhino Server. Libraries exist by default for each organization as the root of every life cycle environment path and the source of content for every content view.

- Life Cycle Environment

-

A container for content view versions consumed by the content hosts. A Life Cycle Environment represents a step in the life cycle environment path. Content moves through life cycle environments by publishing and promoting content views.

- Life Cycle Environment Path

-

A sequence of life cycle environments through which the content views are promoted. You can promote a content view through a typical promotion path; for example, from development to test to production.

- Manifest (Red Hat Subscription Manifest)

-

A mechanism for transferring subscriptions from the Red Hat Customer Portal to orcharhino. Do not confuse with Puppet Manifest.

- OpenSCAP

-

A project implementing security compliance auditing according to the Security Content Automation Protocol (SCAP). OpenSCAP is integrated in orcharhino to provide compliance auditing for managed hosts.

- Organization

-

An isolated collection of systems, content, and other functionality within an orcharhino deployment.

- Parameter

-

Defines the behavior of orcharhino components during provisioning. Depending on the parameter scope, we distinguish between global, domain, host group, and host parameters. Depending on the parameter complexity, we distinguish between simple parameters (key-value pair) and smart parameters (conditional arguments, validation, overrides).

- Parametrized Class (Smart Class Parameter)

-

A parameter created by importing a class from Puppet server.

- Permission

-

Defines an action related to a selected part of orcharhino infrastructure (resource type). Each resource type is associated with a set of permissions, for example the Architecture resource type has the following permissions: view_architectures, create_architectures, edit_architectures, and destroy_architectures. You can group permissions into roles and associate them with users or user groups.

- Product

-

A collection of content repositories. Products are either provided by Red Hat CDN or created by the orcharhino administrator to group custom repositories.

- Promote (a Content View)

-

The act of moving a content view from one life cycle environment to another.

- Provisioning Template

-

Defines host provisioning settings. Provisioning templates can be associated with host groups, life cycle environments, or operating systems.

- Publish (a Content View)

-

The act of making a content view version available in a life cycle environment and usable by hosts.

- Pulp

-

A service within Katello responsible for repository and content management.

- Pulp Mirror

-

An orcharhino Proxy component that mirrors content.

- Puppet

-

The configuration management component of orcharhino.

- Puppet Agent

-

A service running on a host that applies configuration changes to that host.

- Puppet Environment

-

An isolated set of Puppet Agent nodes that can be associated with a specific set of Puppet Modules.

- Puppet Manifest

-

Refers to Puppet scripts, which are files with the .pp extension. The files contain code to define a set of necessary resources, such as packages, services, files, users and groups, and so on, using a set of key-value pairs for their attributes.

Do not confuse with Manifest (Red Hat Subscription Manifest).

- Puppet Server

-

An orcharhino Proxy component that provides Puppet Manifests to hosts for execution by the Puppet Agent.

- Puppet Module

-

A self-contained bundle of code (Puppet Manifests) and data (facts) that you can use to manage resources such as users, files, and services.

- Recurring Logic

-

A job executed automatically according to a schedule. In the orcharhino management UI, you can view those jobs under Monitor > Recurring logics.

- Registry

-

An archive of container images. orcharhino supports importing images from local and external registries. orcharhino itself can act as an image registry for hosts. However, hosts cannot push changes back to the registry.

- Repository

-

Provides storage for a collection of content.

- Resource Type

-

Refers to a part of the orcharhino infrastructure, for example host, orcharhino Proxy, or architecture. Used in permission filtering.

- Role

-

Specifies a collection of permissions that are applied to a set of resources, such as hosts. Roles can be assigned to users and user groups. orcharhino provides a number of predefined roles.

- SCAP content

-

A file containing the configuration and security baseline against which hosts are checked. Used in compliance policies.

- Scenario

-

A set of predefined settings for the orcharhino CLI installer. A scenario defines the type of installation. For example, to install orcharhino Proxy, execute orcharhino-installer --no-enable-foreman. Every scenario has its own answer file to store the scenario settings.

- Smart Proxy

-

An orcharhino Proxy component that can integrate with external services, such as DNS or DHCP. In upstream Foreman terminology, Smart Proxy is a synonym of orcharhino Proxy.

- Standard Operating Environment (SOE)

-

A controlled version of the operating system on which applications are deployed.

- Subnet Image

-

A type of generic image for PXE-less provisioning that communicates through orcharhino Proxy.

- Subscription

-

An entitlement for receiving content and service from Red Hat.

- Synchronization

-

Refers to mirroring content from external resources into the orcharhino Library.

- Synchronization Plan

-

Provides scheduled execution of content synchronization.

- Task

-

A background process executed on orcharhino Server or orcharhino Proxy, such as repository synchronization or content view publishing. You can monitor the task status in the orcharhino management UI under Monitor > Tasks.

- Trend

-

A means of tracking changes in specific parts of the orcharhino infrastructure. Configure trends in the orcharhino management UI under Monitor > Trends.

- User Group

-

A collection of roles which can be assigned to a collection of users.

- User

-

Anyone registered to use orcharhino. Authentication and authorization are possible through built-in logic, through external resources (LDAP, Identity Management, or Active Directory), or with Kerberos.

- virt-who

-

An agent for retrieving IDs of virtual machines from the hypervisor. When used with orcharhino, virt-who reports those IDs to orcharhino Server so that it can provide subscriptions for hosts provisioned on virtual machines.
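The distinction between applicable and installable errata, described under Errata in this glossary, can be expressed with set operations. The sketch below is a conceptual model only. The function name and the erratum identifiers are hypothetical, and it assumes that installable errata are the applicable errata that are also present in the host's content view.

```python
def classify_errata(errata, installed_packages, content_view_errata):
    """Split errata into applicable and installable sets for one host.

    errata: {erratum id: set of package names the erratum updates}
    installed_packages: package names installed on the host
    content_view_errata: erratum ids present in the host's content view
    """
    applicable = {
        erratum_id
        for erratum_id, packages in errata.items()
        if packages & installed_packages
    }
    installable = applicable & content_view_errata
    return applicable, installable


# Hypothetical data for a single host.
errata = {
    "ERRATUM-1": {"openssl"},
    "ERRATUM-2": {"bash"},
    "ERRATUM-3": {"httpd"},
}
installed = {"openssl", "bash"}
in_content_view = {"ERRATUM-1", "ERRATUM-3"}

applicable, installable = classify_errata(errata, installed, in_content_view)
print(sorted(applicable), sorted(installable))
```

In this example, ERRATUM-2 is applicable but not installable. It only becomes installable once a content view version that contains it is available to the host.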